COVID-19, The Fight, and Folding@Home

Written by Dario Vianello, a Hybrid Cloud Architect at G-Research.

There are many ways to help the world deal with COVID-19 and its many repercussions throughout society. Many people volunteer their time, some donate to charities supporting those who are struggling, and others help in countless other ways.

Finally, there’s a steadily growing number of people donating computing power to quickly model some key proteins of the virus, with the “Demogorgon” taking the top spot. Resist the urge to go back and watch a Stranger Things episode for a second: in this case we’re talking about the spike protein that the new coronavirus uses to attach to human cells, which is far more terrifying than the Stranger Things one. Naturally, a lot of research is focused on this protein right now, as interfering with the way it locks onto our cells is seen as a possible way to stop the virus spreading. Knowing how the spike protein moves and interacts with its environment helps us understand how best to block it. But how do we go about simulating how a protein moves? With a ton of compute power, of course! Preferably, with a ton of GPUs.

Folding@Home

Folding@Home has been conducting scientific research for a long time now by harvesting idle capacity from computers all around the world, ranging from laptops all the way to HPC facilities and everything in between. People have contributed power to a lot of studies, but given recent events Folding@Home has decided to pivot most of its compute capacity onto SARS-CoV-2, the slightly less friendly name of the new coronavirus.

Technology Innovation

The Technology Innovation Group (TIG) in G-Research does research of a different kind. Our mission is to continuously scan the never-ending stream of new and innovative technologies being released into the wild to ensure G-Research has the best technology available for running the kinds of workloads important to our business. As you can imagine, this involves a lot of data processing and a lot of maths of different kinds. Sound familiar? To ensure we are in a position to experiment with any new software or hardware, we have a lab comprising a group of workstations (used less during the peak of lockdown) and a server room with data-centre-grade cooling where we can rack more serious hardware. This is where we have various kinds of servers and more than a few GPUs. Sometimes they are used to test new software libraries and sometimes they are part of an HPC simulation driving some stress testing of new storage, networks, or other hardware. Right now? Yes, they are trying to work out how to stop the coronavirus from spreading.

Joining the fight

It was about the 20th of March when someone in TIG stumbled upon the Folding@Home initiative supporting coronavirus research. Running it on your laptop is a fine and conscientious thing to do but it cannot compare to thousands upon thousands of GPU cores. So we thought… Why not use them to join the fight and do some protein folding?

If you are reading this, you might be wondering whether you or your business could do something similar. We urge you to find out. Every core helps. If, like us, you have spare capacity some of the time but need to be able to prioritise business-critical work at other times, then read on… We have solved that problem.

Automation and Infrastructure as Code

We initially configured a Windows VM with a single V100 on OpenStack by hand (yep, all those GPUs are now available through OpenStack) to quickly test the idea, and started folding in about an hour on a single GPU. That was cool, but we had three more idle GPUs to grab and we didn’t want to put all of them on one VM, as that would have meant stopping the whole show even if we needed just one of them for a PoC. Configuring four Windows VMs manually didn’t sound very efficient, so what next? Enter Terraform and Ansible!

It took us 53 lines of Terraform code to have our OpenStack build four Linux-based VMs with a V100 each. Actually, to be scientifically precise, only 18 of those lines deal with creating VMs; the rest are mostly networking. With the VMs ready, the next step was installing software, and we knew that meant essentially the Folding@Home client and some Nvidia drivers. Not a lot, but enough that we didn’t want to do it manually, especially as we knew one of those cards might be needed for a PoC for a few days. Destroy and rebuild, all by hand? No thanks!
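The value of declaring infrastructure as code is that you state the end result you want (here, four VMs with one V100 each) and the tool works out what to create or destroy to get there. The toy Python sketch below illustrates only that planning step, under loudly stated assumptions: the VM names (`folder-0` and so on) and the flavor name `gpu.v100` are hypothetical, and it neither talks to OpenStack nor tracks state the way Terraform does.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMSpec:
    name: str
    flavor: str  # hypothetical OpenStack flavor exposing one V100


def desired_state(count: int, flavor: str = "gpu.v100") -> set[VMSpec]:
    """Declare one folding VM per GPU (hypothetical naming scheme)."""
    return {VMSpec(f"folder-{i}", flavor) for i in range(count)}


def plan(desired: set[VMSpec], actual: set[VMSpec]) -> tuple[list[VMSpec], list[VMSpec]]:
    """Diff declared vs. running VMs; return (to_create, to_destroy) sorted by name."""
    to_create = sorted(desired - actual, key=lambda vm: vm.name)
    to_destroy = sorted(actual - desired, key=lambda vm: vm.name)
    return to_create, to_destroy


if __name__ == "__main__":
    # A PoC needs one card for a few days: declare three folders instead of four.
    create, destroy = plan(desired_state(3), desired_state(4))
    print("create:", [vm.name for vm in create], "destroy:", [vm.name for vm in destroy])
    # When the PoC is over, declare four again: only the missing VM is rebuilt.
    create, destroy = plan(desired_state(4), desired_state(3))
    print("create:", [vm.name for vm in create], "destroy:", [vm.name for vm in destroy])
```

Freeing a card for a PoC then becomes a one-number change to the declared state, with only the affected VM destroyed and later rebuilt while the others keep folding.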

G-Research has become a voracious user of Ansible, one of the few tools available that can, given a specification, configure a computer according to it (bit of a simplification, but let’s go with it nonetheless).

By this point we knew we needed “recipes” for at least Nvidia drivers and the Folding@Home client. A quick search on Ansible Galaxy – the equivalent of Google for pre-made Ansible recipes (called roles in Ansible parlance) – gave us a ready-to-use role from Nvidia to deal with drivers, but nothing really usable for the Folding@Home client.

We’ve got some experience in writing Ansible in the lab, so we spent some time knocking up a small role to install and configure the client. It’s quite easy, as there are packages available out there, if a bit clunky: the client actually rewrites its XML configuration file when it starts, to add a few runtime settings.

If you’re an Ansible connoisseur, you’ll know this means that the next time you run your recipe (or playbook) the file won’t match, so Ansible will rewrite it, and that might cause reboots and other issues depending on your playbook logic. Ansible can inject individual XML keys instead of writing the whole file, which might avoid this particular problem, but we’ve left that as the next thing to do for the time being.
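One way to avoid fighting the client over its config file is to set only the keys you care about and leave anything the client added at runtime alone; Ansible’s `community.general.xml` module works at this level. The standard-library Python sketch below shows the idea. It assumes a v7-style layout where each setting is an element with a `value` attribute (for example `<user value="..."/>`), and the user name and team number used here are placeholders.

```python
import xml.etree.ElementTree as ET


def set_keys(xml_text: str, settings: dict[str, str]) -> tuple[str, bool]:
    """Set <key value="..."/> children of the root element.

    Returns the serialised XML and whether anything changed. Elements the
    client added on its own (e.g. slots) are left untouched.
    """
    root = ET.fromstring(xml_text)
    changed = False
    for key, value in settings.items():
        elem = root.find(key)
        if elem is None:
            # Key missing entirely: add it as a new child of the root.
            elem = ET.SubElement(root, key)
        if elem.get("value") != value:
            elem.set("value", value)
            changed = True
    return ET.tostring(root, encoding="unicode"), changed


if __name__ == "__main__":
    # Placeholder values; a real config would carry your own user and team.
    wanted = {"user": "ExampleUser", "team": "0"}
    # Pretend the client has already rewritten the file and added a GPU slot.
    on_disk = '<config><user value="ExampleUser"/><team value="0"/><slot id="0" type="GPU"/></config>'
    _, changed = set_keys(on_disk, wanted)
    print("changed:", changed)
```

Because the function reports whether anything changed, a caller can skip rewriting the file, and any restart that would follow, when the settings are already in place.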

Problem solving

We had the drivers and we had the clients, but were we ready to fold? Almost. This is where we spent a good hour scratching our heads over GPUs that seemingly picked up jobs from F@H but exited with errors within a few seconds of starting them. After a decent amount of Googling, we found a post suggesting that we needed the ocl-icd-opencl-dev and ocl-icd-libopencl1 packages on the system to get GPU jobs off the ground. Sure enough, after adding those packages to our F@H role and running it, we started seeing jobs running and succeeding!
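Jobs that die within seconds are consistent with a missing OpenCL ICD loader, which is what those packages provide on Debian/Ubuntu. A rough pre-flight check, assuming a Linux host, could look like the sketch below: it asks the dynamic linker for `libOpenCL` via `ctypes.util.find_library` and looks for vendor `.icd` registration files under `/etc/OpenCL/vendors`, where the Nvidia driver normally places its own. The function name and messages are hypothetical, and passing the check doesn’t guarantee that F@H work units will succeed.

```python
import ctypes.util
from pathlib import Path


def opencl_problems(vendors_dir: Path = Path("/etc/OpenCL/vendors")) -> list[str]:
    """Return reasons GPU folding jobs may fail to start (empty list if none found)."""
    problems = []
    # The OpenCL ICD loader (libOpenCL.so.1) must be findable by the dynamic linker.
    if ctypes.util.find_library("OpenCL") is None:
        problems.append("no OpenCL ICD loader found (e.g. ocl-icd-libopencl1 on Debian/Ubuntu)")
    # Vendor drivers register themselves with the loader via *.icd files.
    icd_files = sorted(vendors_dir.glob("*.icd")) if vendors_dir.is_dir() else []
    if not icd_files:
        problems.append(f"no vendor .icd files in {vendors_dir}")
    return problems


if __name__ == "__main__":
    issues = opencl_problems()
    for issue in issues:
        print("WARNING:", issue)
    if not issues:
        print("OpenCL loader and vendor ICD files look present")
```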

COVID-19, we’re coming for you!

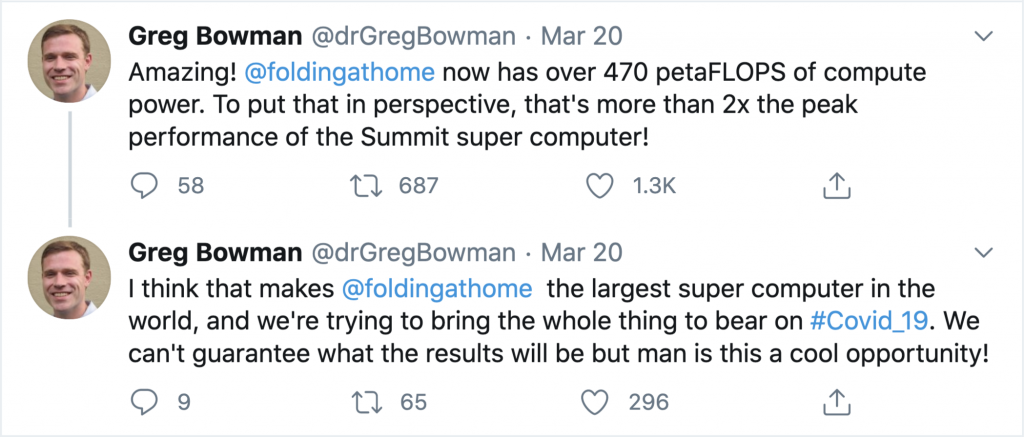

And boy oh boy, we were not alone. Towards the end of March, Greg Bowman, Director of Folding@Home, took to Twitter with this:

Well, isn’t that something?

It also became obvious very quickly that we weren’t the only G-Research folks thinking about this: other members of staff were already folding with their own beefy GPUs at home. Folding@Home allows users to group together in teams, and so the “G-Research – Fold today. Cure tomorrow” team was born.

[If you are folding using hardware in your business, hunt around and see if there are others doing the same – gamification is always a good way to inspire people to greater heights! There must be more GPUs out there you can claim for this fight!]

Follow that link, and you’ll find some stats on how many Work Units we’ve folded together so far, and how we stack up against other teams around the world (sneak peek: we’re ~290th out of ~253,000). There are some real computing monsters in that list, including AWS, Google, CERN (details here) and NVIDIA itself. In fact, there are so many computing monsters that the project ran into trouble a few times, with overloaded servers unable to dish out enough work units to satisfy all the hungry CPUs and GPUs around the world. In the end, it seems Azure stepped in and offered free hosting for a few servers to overcome this (arguably nice to have) problem.

Be persistent, join the fight, and recruit others! Let’s crack this!

We’ve now been chipping away at work units for c. 6 months, and as long as those V100s are available, we’ll keep going. The Infrastructure-as-Code exercise we did at the beginning has definitely helped us re-provision our folding capacity as we moved it across different OpenStack VMs and a bare-metal server to accommodate changing computing needs and PoCs in the lab.

While we all hope we’ll soon reach a point where folding the Demogorgon is no longer needed, there are plenty of other projects fighting other diseases (think cancer and Ebola, for example) to which volunteered compute capacity will be turned. If you fancy joining this worldwide (and world-class) effort, head over to the F@H website and download the client.