Written by Mark Burnett, Head of Technology Innovation Group, with contributions from Peter Wianecki, Engineer, and Peter Merrett, Infrastructure Compute & Storage Manager

G-Research is continually striving to identify and make the most of cutting-edge technologies available in industry. As is the case with many organisations, we have a significant estate with lots of features and functionality we want to enhance. New technologies need to be evaluated both on their individual merits and their ability to ‘play nicely’ with the rest of our tech-stack.

As such, we are always keen to build strong relationships with visionary vendors, especially where we can get forward visibility of their tech roadmap and influence it. Of particular importance to us is the ability to land new tech into our on-premises cloud native environment in a way that can scale massively with high performance. We are prepared to invest the time to understand where vendors are going with their roadmaps and, for the right technologies, we are prepared to share where we are going and our ideas for how we could work more closely together.

Challenge

We needed an enterprise storage platform that had effectively unlimited scalability and performance with minimal operational overhead. This was to support a large ecosystem of simulation, machine learning and analytics workloads, driven by data of increasing richness and diversity and amounting to several tens of petabytes.

We were looking for a silver bullet: a platform that would better enable us to support the way we work today, as well as help accelerate our roadmap for the future. We wanted to do both of these things whilst also enabling a seamless transition from one world to the other. We had a large Windows estate integrating many different architectures and technology stacks. We wanted to build a more uniform, flexible and fungible Kubernetes-based Linux estate built on cloud native principles.

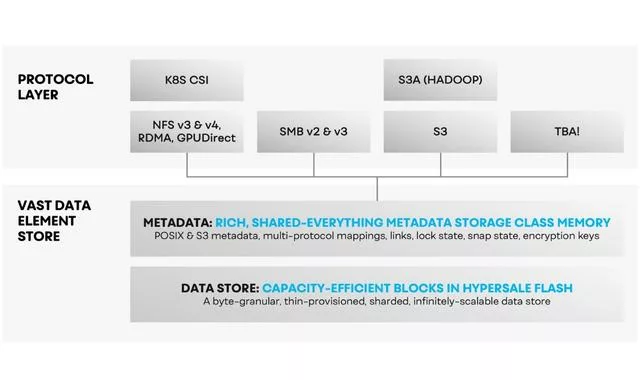

Part of the challenge was to enable a transition from SMB/CIFS to Linux and cloud-friendly protocols such as NFS and S3. We wanted views of the same data from different protocols, without compromising performance or security, and without having to knife-and-fork data back and forth to enable different systems in different stages of transition.

Put simply, we wanted to have our cake and eat it.

Technology Research

Our Technology Innovation Group (TIG) assessed the storage platform landscape, selecting leading products from across industry to evaluate. We explored a wide range of solutions, from specialist hardware appliances through hybrid architectures to pure software-defined storage solutions that can be run on commodity hardware.

We didn’t want to limit ourselves to solutions that already existed and reached out to contacts in industry to find companies and start-ups working on next generation storage solutions. We ended up with a number of products, some mature, some still in development, and some where the start-ups were still in stealth. Each solution we invested time in had tremendous promise in its own right.

Selection Criteria

With many storage use cases, it was necessary to keep an open mind as to the kinds of platform that could add value to our IT ecosystem. We created a number of proofs of concept based on different technologies, using multiple benchmarks.

As part of this evaluation process we considered the following:

- Throughput performance for large clusters and individual clients

- Support for our existing access patterns (SMB) and our future ones (NFS, GPUDirect and S3)

- Ease and flexibility of scalability in terms of capacity and performance

- Observability of system and per user metrics

- Data efficiency (compression and deduplication)

- Data protection (replication and snapshots, including immutable snapshots)

- Granular security and access controls for data access and management

- A resilient architecture, mitigating the risk of a multi PB mixed use storage namespace

- Quality of support, both operational and in-depth technical

- Vendor development agility

- Truly non-disruptive upgrades for systems under constant load

Proof of Concept

We performed extensive tests against seven leading providers and concluded that VAST promised the best fit. Other vendor technologies do (or could still) have a place in our estate, but VAST was best suited as a scalable enterprise storage platform.

These early tests followed a minimum viable product principle, testing a small four-node VAST cluster using the limited client hardware available in our lab, consisting of HP DL360 Gen10 servers:

- 2 x Intel Xeon Gold 6226 CPU 2.70GHz

- 192GB DDR4 RAM

- Dual port Mellanox ConnectX5 100G NIC

Focusing on performance and protocol, our testing was weighted towards file, both for single clients and in aggregate.

NFS versus SMB/CIFS

Having been aware for some time that our existing Linux kernels were not well optimised for SMB/CIFS, we first validated our direction of travel to NFS, and specifically NFSv4.

At this time VAST did not support SMBv3. However, both VAST and our incumbent platform showed a marked improvement for NFSv3 read and write workloads, for both large and small IOs.

Reads were ~3x faster and writes ~2x faster. Whilst SMBv3 multi-channel softened this disparity, it had already proven less reliable for us than nconnect when configuring our environment.

NFSv4 with Kerberos was also on VAST’s roadmap, and this testing gave us the confidence to focus their dev effort on ensuring it was ready for a deployment date around six months away.

NFS Single Client Tests

Standard NFS mounts do not perform well, with throughput topping out at around 2 GB/s, even on a 100G NIC. With modern Linux kernels we could take advantage of nconnect and multipath:

- nconnect allowed control of the number of connections (network flows) between each NFS client and VAST node (up to a limit of 16)

- multipath allowed each NFS client to connect and balance load over multiple VAST nodes, leveraging the performance of multiple nodes and paths through the network

These mount options can be applied together for further improvement. With the appropriate NIC driver support, RDMA can also be enabled.
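As a rough illustration of how these options combine, the sketch below assembles an NFS mount option string in Python. It is not the configuration we used: the function name `build_nfs_mount_options`, the choice of `vers=3` and the `extra` argument are assumptions made for the example. The `nconnect`, `proto` and `port` options are standard Linux NFS client mount options; the multipath option is specific to the vendor's client driver and is not named in this article, so it is left to be passed through `extra`.

```python
def build_nfs_mount_options(nconnect=1, use_rdma=False, extra=()):
    """Return a comma-separated NFS mount option string (illustrative only)."""
    # The Linux NFS client accepts nconnect values from 1 to 16.
    if not 1 <= nconnect <= 16:
        raise ValueError("nconnect must be between 1 and 16")

    opts = ["vers=3"]  # assumption: NFSv3, as in the early tests
    if nconnect > 1:
        opts.append(f"nconnect={nconnect}")

    if use_rdma:
        # 20049 is the conventional port for NFS over RDMA.
        opts += ["proto=rdma", "port=20049"]
    else:
        opts.append("proto=tcp")

    # Vendor-specific options (for example multipath) are passed through as-is.
    opts.extend(extra)
    return ",".join(opts)


if __name__ == "__main__":
    print(build_nfs_mount_options(nconnect=8))
    print(build_nfs_mount_options(nconnect=16, use_rdma=True))
```

The resulting string would be handed to `mount -t nfs -o <options> <server>:<export> <mountpoint>`; the server, export and mount point depend entirely on the local environment.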

Figure 1: Performance improvements at each step, compared to the baseline

Whilst it was no surprise to see RDMA performing at the top, demonstrating near line rate read performance, it was encouraging to see how close we could get with just nconnect and multipath.
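To put "near line rate" in context, the short calculation below converts a link speed into a theoretical throughput ceiling and expresses the ~2 GB/s standard-mount figure as a fraction of it. This is a back-of-envelope sketch: it assumes a single 100 Gbit/s port, decimal units and no protocol overhead, and the function names are made up for the example.

```python
def line_rate_gb_per_s(link_gbit_per_s):
    """Theoretical ceiling in GB/s for a link speed given in Gbit/s."""
    return link_gbit_per_s / 8  # 8 bits per byte, ignoring protocol overhead


def fraction_of_line_rate(throughput_gb_per_s, link_gbit_per_s):
    """Share of the theoretical ceiling achieved by a measured throughput."""
    return throughput_gb_per_s / line_rate_gb_per_s(link_gbit_per_s)


if __name__ == "__main__":
    ceiling = line_rate_gb_per_s(100)
    baseline = fraction_of_line_rate(2, 100)
    print(f"100G ceiling: {ceiling} GB/s, standard mount: {baseline:.0%} of it")
```

On these assumptions a standard mount uses only about a sixth of what one 100G port can carry, which is the gap the additional mount options and RDMA are closing.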

Having really fast storage is great, but making use of the performance available is a multi-faceted challenge. RDMA was seen to require sophisticated client side drivers, network tuning and monitoring when deployed at scale.

VAST’s simple SMB, NFS and S3 access patterns, through to NFS with performance-improving options (nconnect + multipath), enabled us to get near maximum performance with minimal changes to the stack and at an acceptable client CPU penalty (no RDMA offload benefits).

Figure 2: VAST Data Element Store: A Multi-Protocol Data Abstraction

We think that using NFS with these options provides a great compromise between performance and ease of deployment for our early adoption phase, without sacrificing the promise of RDMA in future years, be this for general purpose or small accelerated projects.

Deploying to Production

These results, produced quickly by TIG, gave the Storage team the confidence to focus our efforts on ratifying VAST as a potential successor to our incumbent platform. A second small system was delivered by VAST within a few weeks and deployed inside our environment, so that integrations with core infrastructure and tooling could be assessed, along with its ability to support more realistic multi-client workloads.

As we established an understanding of the platform’s internal workings, we were able to identify areas of concern requiring enhancements. So began an engagement of openness with respect to our strategy and success criteria. Our expectation was dedication from VAST to address our present and future needs, notwithstanding their commitments to other clients, which served to benefit us indirectly.

VAST set up multiple direct calls with some of these existing customers, allowing us to hear honest feedback from their prior experience. Our requirements were then detailed and prioritised with VAST, who committed to dated releases over the following two quarters. A dedicated ten-person team was formed to expedite the development of kerberised NFSv4, and named “co-pilots” were aligned to our account.

Despite identifying no blockers, nor sensing a wavering in VAST’s resolve, the decision to commit to a multi-PB system (the largest we’d deployed, let alone in the first instance) was no minor step. This platform was needed within a tight timeframe, underpinning the parallel deployment of many thousands of CPU and GPU compute nodes (another substantial investment), which were expected to start their ROI within just a few months.

Confidence in G-Research’s storage engineers, along with the relationships we’d established at VAST, was important. Critically, however, it was the realisation that both companies were capable of making this work, and clearly stood to gain so much, that made for a successful partnership.

Figure 3: A VAST Storage Array

Six months after our initial go-live, the system is delivering ground-breaking aggregate and single-user performance, has headroom to deliver further within its existing footprint, and more again with targeted scaling. A second multi-PB platform has been deployed as a discrete namespace for research of a higher IP sensitivity, and a third multi-PB system will deploy in Q2 2023, supporting datasets beyond the original priority scope, dependent on cross-site replication and data protection through immutable snapshots.

The ways of working which were critical to this successful deployment included:

- Real time call home of anonymised system and user stats

- Direct from array upload of diagnostic bundles for detailed analysis

- VAST “co-pilots” dedicated to our account and assisting our engineers on a daily basis

- Shared Jira tracking of requests and issues

- Slack channels allowing direct collaboration as well as WebEx for screen share

- Alignment of our testing platforms for independent verification of reproducers and fixes

- Twice weekly cadence for informal project status updates, ensuring constant focus on priorities and updates on short- and medium-term releases

- Spin-off meetings to discuss complex issues or detail new requirements

- Routine onsite collaboration with senior VAST engineers at G-Research to witness Production workloads, with additional in-house metrics and expertise

- Senior management engagements and QBRs

Issues Encountered

With limited testing scale in our lab, it was inevitable we’d hit issues in production. We worked closely with the vendor to resolve each of them in turn.

Some of the issues we’ve seen include:

- G-Research’s scale (client machines and concurrent users) stretched the tested limits of earlier versions

- Maintaining an even client distribution across C-Nodes, which was affected by DNS TTL and “sticky clients”

- Single file open handle concurrency limits for NFSv4

- Data read IOs competing with metadata IOs from concurrent users

- Hot spotting of devices due to intense reads of freshly written data

Implementing a kerberised NFSv4 mount within the K8s FlexVolume driver was also far more challenging than had originally been expected.

VAST quickly developed a driver to match the spec of G-Research’s prior SMB implementation, and then committed to the long haul of debugging early IO and permissions issues in a multi-user, containerised environment, as well as maintaining this driver across future kernel upgrades.

Outcomes

Through our close collaboration with VAST, a truly valuable partnership, we have found that silver bullet: a platform that supports our efforts now and will help to accelerate our roadmap for the future too.

Our key outcomes to date:

- We selected VAST Universal Storage as it provided high throughput performance for multiple protocols, along with capacity and concurrency scalability and ease of operational management.

- G-Research has large-scale machine learning jobs running continuously from users across many teams. VAST support truly non-disruptive upgrades, meaning we can capitalise on their development agility and proactively upgrade, whilst avoiding disruption.

- VAST recognise that G-Research can stretch their systems to the limit, and will invest the time to isolate issues, re-test improvements and feed back in detail to support and direct their development effort.

- G-Research trusts that VAST have architected a scalable and operable system, and have the engineers needed to advance it based on our observed usage and feedback.

Lessons Learnt

1. Pace yourself

It was important to select a strategic storage platform that could enable our architecture transformation ambitions at enterprise scale and support us into the future. This was a long-term play. We carefully evaluated many solutions, exploring features, performance, and operational ease of use.

2. Think partnership rather than vendor

We worked closely with vendors to understand not just how their product worked but also their vision for where they wanted to take it. We had our own technical requirements and constraints that complicated product selection. Once we had down-selected from the long list of technologies, based on their capability to meet our needs and future vision, we started building closer relationships. This is where we put a lot of effort into providing constructive feedback on which features would make their products easier for us to adopt.

Summing up

Sustainable businesses are built on sustainable technology strategies and these inevitably require strategic partnerships that go beyond mere vendor relations.

We are proud to have developed some strong relationships in industry, including around technologies we have not adopted yet, and the work to find the right ones for our other use cases continues. Finding ways to create strategic alignment is rarely easy. Every business is different and has different strengths and peculiarities. The trick is to embrace both and build relationships in which customers’ use of technologies, products or services helps to inform vendors’ development of them.